As artificial intelligence continues to evolve, generative AI stands out as one of the most transformative technologies for modern businesses. Its ability to create content, enhance the customer or employee experience, automate tasks, and provide new insights sets it apart from traditional AI. For those considering AI implementations, understanding the technological foundations of generative AI can prove beneficial. In this article, we will dive into how generative AI works and its architecture. We will conclude with a look at benefits and use cases for businesses.

What Is Generative AI?

Generative AI refers to artificial intelligence systems that can generate new content, like text, images, music, or code, based on the data on which they have been trained. Unlike traditional AI, which is often used for specific tasks, like classification or prediction, generative AI models can produce creative outputs, drawing from patterns learned from large datasets.

Examples of generative AI include:

- Text Generation: Writing human-like text (e.g., OpenAI’s GPT-4)

- Image Generation: Producing realistic images from scratch (e.g., DALL·E)

- Music Generation: Composing original music tracks

- Code Generation: Writing source code for building applications

What Are the Technical Building Blocks of Generative AI?

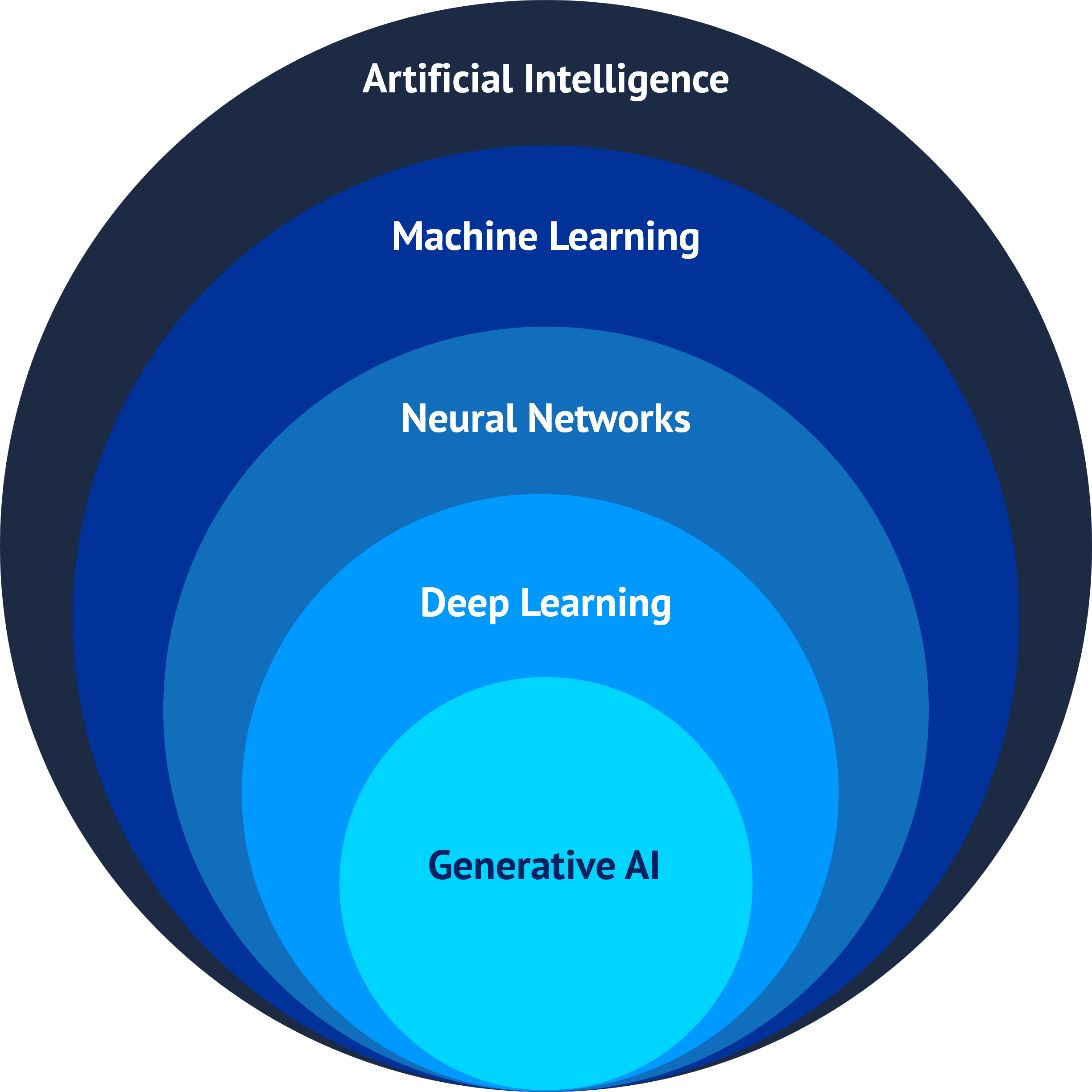

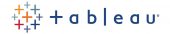

To understand how generative AI works, it helps to grasp the technical components that enable it to generate new and creative outputs. As depicted in the accompanying graphic, generative AI is built on several layers of technology, each playing a specific role.

4 Technologies That Enable Generative AI:

1. Artificial Intelligence (AI)

Artificial intelligence is the simulation of human intelligence in machines programmed to think and learn like humans. AI is a broad field: many AI systems perform problem-solving, decision-making, and language-understanding tasks without creating new content, and generative AI is the subset that does create it.

2. Machine Learning (ML)

Machine Learning is a subset of AI that involves the use of algorithms and statistical models to enable computers to learn from and make predictions or decisions based on data. Instead of being explicitly programmed to perform a task, ML systems improve their performance as they are exposed to more data over time.
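A minimal sketch of “learning from data” in the simplest possible setting, using made-up data points. Instead of hard-coding the rule, the program starts with parameters w = 0 and b = 0 and uses gradient descent to adjust them until the line y = w·x + b fits the data. The data, learning rate, and number of passes are illustrative assumptions; real ML models have far more parameters but follow the same idea.

```python
# Hypothetical training data that happens to follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the rule
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Nudge each parameter in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")
print("prediction for x = 10:", w * 10 + b)
```

The program is never told “multiply by 2 and add 1”; the learned values end up very close to w = 2 and b = 1 purely from the examples.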

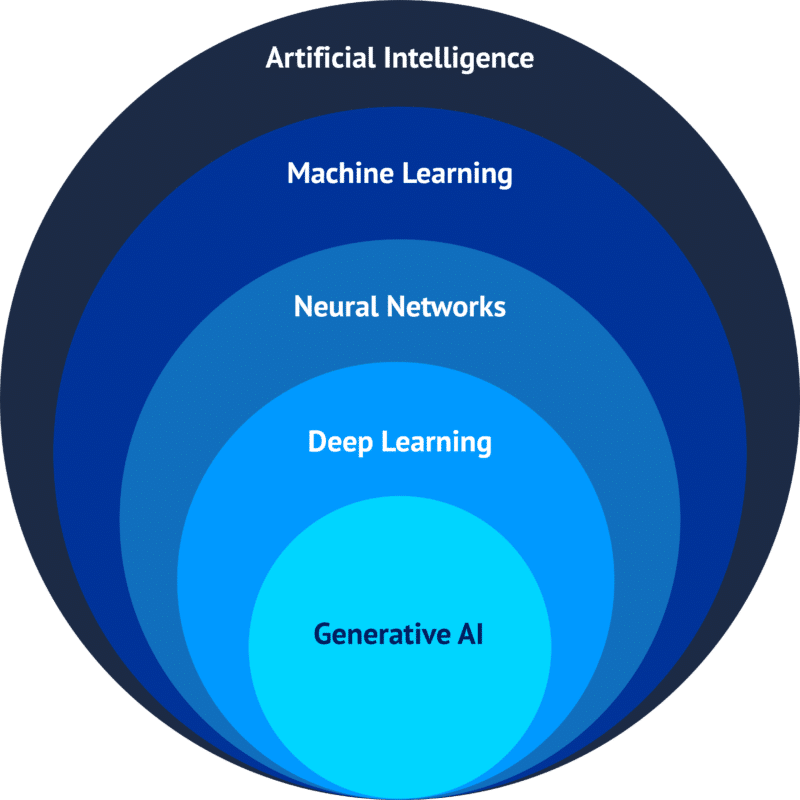

3. Neural Networks

A neural network is made up of interconnected layers of nodes, loosely modeled on the way neurons in the human brain transmit information. A network has an input layer that receives data, one or more hidden layers where computations are performed, and an output layer that produces a final result or prediction. Each connection carries a weight and each node a bias; during training, these parameters are adjusted to minimize output errors. An activation function is applied to the output of each node, which enables the network to learn complex, nonlinear patterns.
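A minimal sketch of a forward pass through a tiny network (2 inputs, 3 hidden nodes, 1 output) in plain Python. The weights and biases are arbitrary illustrative values (in a real network they are learned during training), and ReLU is assumed as the activation function.

```python
def relu(x: float) -> float:
    """Activation function: passes positive values through, zeroes out negatives."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One layer: each node computes a weighted sum of its inputs plus a bias."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs

# Hidden layer: 3 nodes, each with one weight per input (illustrative values).
HIDDEN_WEIGHTS = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
HIDDEN_BIASES = [0.1, 0.0, 0.2]
# Output layer: 1 node with one weight per hidden node.
OUTPUT_WEIGHTS = [[1.0, -0.5, 0.7]]
OUTPUT_BIASES = [0.05]

def forward(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer."""
    hidden = dense(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, activation=relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)

print(forward([1.0, 2.0]))
```

Training would repeatedly compare outputs like this one against known targets and adjust the weights and biases to shrink the error.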

4. Deep Learning

Deep learning is a specialized subset of machine learning that uses neural networks with many layers (hence “deep”) to learn increasingly abstract features from data. These networks are loosely inspired by the structure and function of the human brain, allowing the system to learn patterns and make decisions without hand-crafted rules.

The combination of technologies used in building a generative AI model differs based on the intended purpose of the model.

Techniques for Building Generative AI Models

A number of architectural approaches have been taken. Here are some examples:

Generative Adversarial Networks (GANs)

GAN models use two neural networks: a generator (which creates data) and a discriminator (which evaluates whether data looks real or generated). The two networks compete, resulting in increasingly realistic outputs.
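The competition is commonly written as a minimax objective, as introduced in the original 2014 GAN paper (many practical variants modify it). Here D(x) is the discriminator’s estimated probability that x is real, G(z) is the generator’s output for random noise z, p_data is the distribution of real training data, and p_z is the noise distribution:

$$
\min_G \max_D \; V(D, G) =
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to push this value up by scoring real data near 1 and generated data near 0; the generator tries to push it down by producing samples the discriminator scores as real.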

Variational Autoencoders (VAEs)

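These models encode input data into a compressed representation (the latent space), modeled as a probability distribution, and then decode it back. Sampling new points from the latent space and decoding them generates new data that resembles the original inputs.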
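A minimal sketch of the step that lets a VAE generate new data, assuming the encoder has already mapped an input to the mean and log-variance of a diagonal Gaussian in a 3-dimensional latent space (the numbers are made up, and the decoder is omitted). A latent point is drawn with the reparameterization trick, and training adds a KL-divergence penalty that keeps the latent distribution close to a standard normal, which is what makes sampling fresh latent points meaningful.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

# Hypothetical encoder output for one input.
mu = [0.3, -1.2, 0.0]
log_var = [-0.5, 0.1, 0.0]

rng = random.Random(42)
z = sample_latent(mu, log_var, rng)  # this point would be fed to the decoder
print("latent sample:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))

# To generate brand-new data, skip the encoder and sample z ~ N(0, 1) directly.
new_z = [rng.gauss(0.0, 1.0) for _ in range(3)]
```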

Transformers

Transformer models excel at natural language generation. They have been foundational in enabling tools like GPT-4 to accurately predict the next word (more precisely, the next token) in a sequence based on the text that precedes it.
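Transformers are far more sophisticated, but the generate-one-word-at-a-time loop can be sketched with a toy bigram model that simply counts which word follows which in a tiny made-up corpus. This is a deliberately simplified stand-in: a real model predicts tokens using learned weights and the whole preceding context, not just the previous word.

```python
from collections import Counter, defaultdict

# A tiny, made-up training corpus.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigrams(text):
    """Count how often each word follows each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_words=6):
    """Greedy decoding: repeatedly append the most likely next word."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

model = train_bigrams(CORPUS)
print(generate(model, "the"))
```

The output, “the cat sat on the cat sat”, loops because the model only looks one word back; self-attention lets a transformer weigh the entire preceding context instead.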

Transformers use a neural network technique called self-attention to weigh the importance of different parts of the input data. By focusing on the parts it judges most relevant, the model interprets the user’s intent and generates an output or response.

For example, to maintain coherence and context when interpreting long passages of text, a transformer attends to the relevant words or phrases. This is similar to how you or I might note keywords to help us remember information or form an appropriate response.
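A minimal sketch of scaled dot-product attention, the computation at the heart of self-attention, in plain Python. The three token vectors are made-up numbers, and for brevity the queries, keys, and values are the token vectors themselves; a real transformer first multiplies them by learned weight matrices and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = the inputs."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # How relevant is every token (key) to this token (query)?
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in vectors]
        weights = softmax(scores)
        # Output: an attention-weighted blend of all value vectors.
        blended = [sum(w * value[i] for w, value in zip(weights, vectors))
                   for i in range(d)]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0, 1.0], [0.0, 2.0, 0.0], [1.0, 0.1, 0.9]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Because the first and third tokens point in similar directions, the first token pays more attention to the third than to the second.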

Training Generative AI Models

The initial training of a generative AI model is a massive undertaking, requiring a significant time and expense commitment.

Vendors first train their models on large datasets, crawling web pages and ingesting books, magazines, articles, newspapers, political documents, images, and video. In this stage, the model learns statistical patterns in the data, for example by repeatedly predicting the next word in a passage. Then, a small army of humans is tasked with labeling data and judging the quality of the outputs generated by the model. (Think thumbs up/thumbs down.) This feedback is used to refine the model so that it learns to generate the expected outputs.

Pre-Trained Models

A model is considered pre-trained when developers are satisfied with the quality of the outputs being generated. Pre-trained models are often used by generative AI providers to reduce the time and cost involved when developing a new model with a similar purpose to the pre-trained one.

Pre-trained models have now progressed to the point that the most recent versions commonly have hundreds of billions of parameters. Very few organizations possess the resources to build their own generative AI models from scratch. Still, some effort is being made to create smaller models through improved training. Smaller models, which can be developed and trained more quickly, could make it practical to embed self-contained AI models in portable devices, even those that cannot count on a reliable internet connection.
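Some back-of-the-envelope arithmetic shows why parameter count matters. Assuming each parameter is stored as a 16-bit (2-byte) number, and ignoring the extra memory needed for activations and, during training, gradients and optimizer state, just holding the weights takes roughly parameters × 2 bytes. The model sizes below are illustrative, not figures for any specific product; 4-bit quantization (0.5 bytes per parameter) is one common way smaller models are squeezed onto devices.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: float) -> float:
    """Approximate memory (GB, where 1 GB = 10**9 bytes) to store the weights alone."""
    return num_parameters * bytes_per_parameter / 1e9

# Illustrative sizes, not specific products.
examples = {
    "100B parameters, 16-bit": (100e9, 2),
    "7B parameters, 16-bit": (7e9, 2),
    "7B parameters, 4-bit quantized": (7e9, 0.5),
}
for label, (params, nbytes) in examples.items():
    print(f"{label}: ~{weight_memory_gb(params, nbytes):.1f} GB")
```

A 100-billion-parameter model needs on the order of 200 GB for its weights alone, far beyond a phone, while a 4-bit 7-billion-parameter model fits in about 3.5 GB.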

Fine-Tuned Generative AI (a Form of Transfer Learning)

When developing an AI model to perform tasks in a more specialized way for a particular knowledge domain or for a particular organization, developers might use a process called fine-tuning. A pre-trained model will typically be used as a starting point for the new model, giving it a head-start on the learning process.

The model is then fine-tuned: it is trained further on a smaller, curated set of examples that show how to focus its pre-trained capabilities on the desired behavior. These might be example questions paired with the expected answers for that question (or related questions). The examples might also demonstrate the expected tone of the generated text or the specific format required for the generated output.
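Fine-tuning examples are often stored as one JSON record per line (JSONL). The sketch below serializes a couple of question/answer pairs in a chat-style layout with system, user, and assistant messages, similar to what some hosted fine-tuning services accept. The exact schema varies by provider, so treat the field names as an assumption to check against your provider’s documentation; the company name and answers are hypothetical.

```python
import json

# Hypothetical examples teaching a support assistant a house tone and format.
SYSTEM_PROMPT = "You are Acme Corp's support assistant. Answer in two short sentences."
EXAMPLES = [
    ("How do I reset my password?",
     "Click 'Forgot password' on the sign-in page. We'll email you a reset link."),
    ("Can I change my billing date?",
     "Yes, go to Settings > Billing and pick a new date. The change applies next cycle."),
]

def to_jsonl(examples, system_prompt):
    """Serialize (question, answer) pairs as one chat-style JSON record per line."""
    lines = []
    for question, answer in examples:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"

print(to_jsonl(EXAMPLES, SYSTEM_PROMPT), end="")
```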

Some common use cases where fine-tuning can improve results include:

- Setting the style, tone, format, or other qualitative aspects

- Improving reliability at producing a desired output

- Correcting failures to follow complex prompts

- Handling many edge cases in specific ways

- Performing a new skill or task that’s hard to articulate in a prompt

Retrieval-Augmented Generation (RAG)

RAG is a technique that combines generative AI with retrieval systems. This method allows the AI to refer to external documents or databases during the generation process, which helps keep the output current and grounded in the source material. It is especially useful when businesses need AI to interact with proprietary data or continuously changing datasets.

Important use cases include giving a chatbot access to internal company data and answering questions using only authoritative sources.

Because outputs are generated from a limited or restricted set of data, RAG is a valuable architectural approach to consider for highly regulated industries or when digital security and privacy are important concerns.

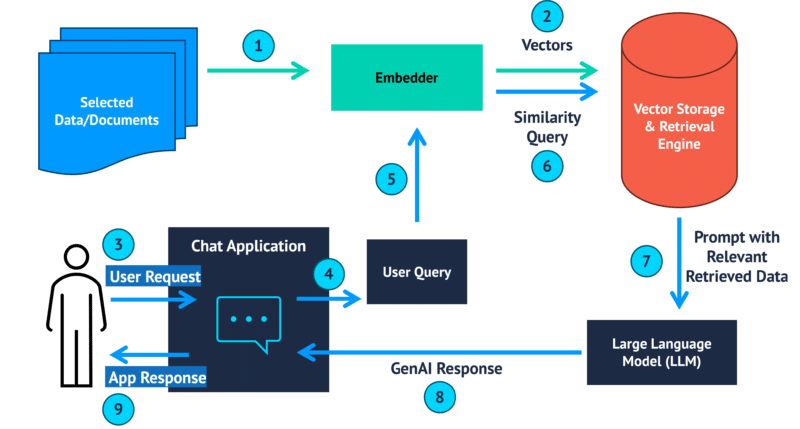

In the RAG architecture diagram above, the basic flow of information is explained as follows:

- The selected data and documents specific to a knowledge domain or organization are loaded ahead of time using an embedder. During this process, the documents are typically split into many smaller chunks. Embedding is covered in more detail later in this article.

- The results of the embedding process, each an array (vector) of numbers as described later in this article, are stored in a database for later retrieval.

- Once the system is loaded with the proprietary information, a user then interacts with a chat application and the user provides a task or asks a question about the previously loaded documents.

- The chat application then creates a query to be used to pull data from the pre-loaded database.

- The user’s query is then passed through the same embedding algorithm used during the initial load of the pre-selected documents.

- The embedded query is used in a similarity search to retrieve the contents of the vector database that are most related to the user’s question.

- The portions of the pre-loaded documents that seem most relevant to the user’s question or task are used to prompt the pre-trained Large Language Model (LLM).

- The LLM uses its pre-training to create the text related to the user’s question or task using the relevant data from the pre-loaded data/documents.

- The chat application presents the answer to the user.
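The flow above can be sketched end to end in plain Python. To keep it self-contained, this sketch swaps in a toy bag-of-words “embedder” (hashing words into a fixed-size vector) and an in-memory list in place of a real embedding model and vector database, and it stops at building the prompt instead of calling an LLM. The function names, documents, vector size, and chunk size are all hypothetical.

```python
import math
import re
import zlib

DIM = 256  # toy embedding size (assumption)

def embed(text):
    """Toy embedder: hash each word into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0  # crc32 is stable across runs
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def chunk(document, size=12):
    """Split a document into chunks of at most `size` words."""
    words = document.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

# Load ahead of time: chunk, embed, and store (hypothetical documents).
DOCUMENTS = [
    "Employees accrue 1.5 vacation days per month. Unused vacation days roll over up to 10 days.",
    "The VPN must be used when accessing internal systems from outside the office network.",
    "Expense reports are due by the fifth business day of the following month.",
]
store = [(embed(c), c) for doc in DOCUMENTS for c in chunk(doc)]

def retrieve(question, k=2):
    """Embed the question the same way and rank chunks by similarity.
    Vectors are unit length, so the dot product equals cosine similarity."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: sum(a * b for a, b in zip(q, item[0])),
                    reverse=True)
    return [text for _, text in ranked[:k]]

def build_prompt(question):
    """Put the most relevant chunks into the prompt that would be sent to the LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(question))
    return (f"Answer using only the context below.\n\nContext:\n{context}\n\n"
            f"Question: {question}")

print(build_prompt("How many vacation days roll over?"))
# Not shown: send the prompt to an LLM and present its answer to the user.
```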

5 Key Concepts of Generative AI

1. Prompts

When you input text into an AI tool, you are inputting a prompt. Prompts can take the form of questions, instructions, requests for output (think mathematical equations), and more.

2. Prompt Engineering

Prompt engineering is the art of crafting queries (prompts) to elicit useful responses from AI models. Skilled prompt engineers know how to provide the right balance of information to get the intended response from a generative AI model.
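One common way to balance the information in a prompt is to assemble it from labeled parts: a role, background context, the task, optional examples, and the desired output format. The sketch below is one such pattern, not a standard; the function name and the sample content are hypothetical.

```python
def compose_prompt(role, context, task, output_format, examples=()):
    """Assemble a prompt from commonly used parts; empty parts are skipped."""
    sections = [
        f"You are {role}." if role else "",
        f"Context:\n{context}" if context else "",
        f"Task:\n{task}",
        "Examples:\n" + "\n".join(examples) if examples else "",
        f"Format your answer as: {output_format}" if output_format else "",
    ]
    return "\n\n".join(s for s in sections if s)

prompt = compose_prompt(
    role="a friendly customer-support agent for an online bookstore",
    context="Orders ship within 2 business days. Returns are accepted for 30 days.",
    task="Reply to a customer asking whether they can return a book bought last week.",
    output_format="two short sentences",
)
print(prompt)
```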

3. Tokenization

Before the AI can process text, the text must be broken down into smaller units called tokens, which may be whole words, parts of words, or punctuation marks. Tokenization allows the AI to handle text efficiently and learn relationships between words or parts of words.
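A toy sketch of subword tokenization using greedy longest-match against a small, made-up vocabulary. It is similar in spirit to WordPiece; production tokenizers (for example, byte-pair encoding) learn vocabularies of tens of thousands of tokens from data, but likewise map each token to an integer ID.

```python
# Hypothetical vocabulary mapping subword pieces to integer token IDs.
VOCAB = {"un": 0, "happi": 1, "ness": 2, "the": 3, "cat": 4, "s": 5, "[UNK]": 6}

def tokenize_word(word):
    """Split one word into the longest matching vocabulary pieces, left to right."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        while end > start and word[start:end] not in VOCAB:
            end -= 1
        if end == start:  # nothing matches: treat the whole word as unknown
            return ["[UNK]"]
        pieces.append(word[start:end])
        start = end
    return pieces

def tokenize(text):
    """Return the token strings and their integer IDs."""
    tokens = [piece for word in text.lower().split() for piece in tokenize_word(word)]
    return tokens, [VOCAB[t] for t in tokens]

print(tokenize("unhappiness"))
print(tokenize("The cats"))
```

Splitting “unhappiness” into “un”, “happi”, and “ness” lets the model relate it to other words that share those pieces.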

4. Embeddings

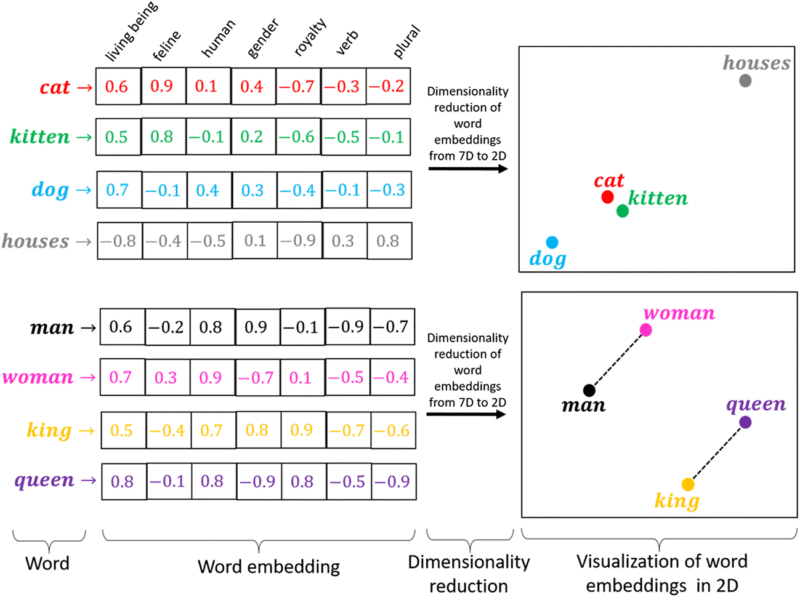

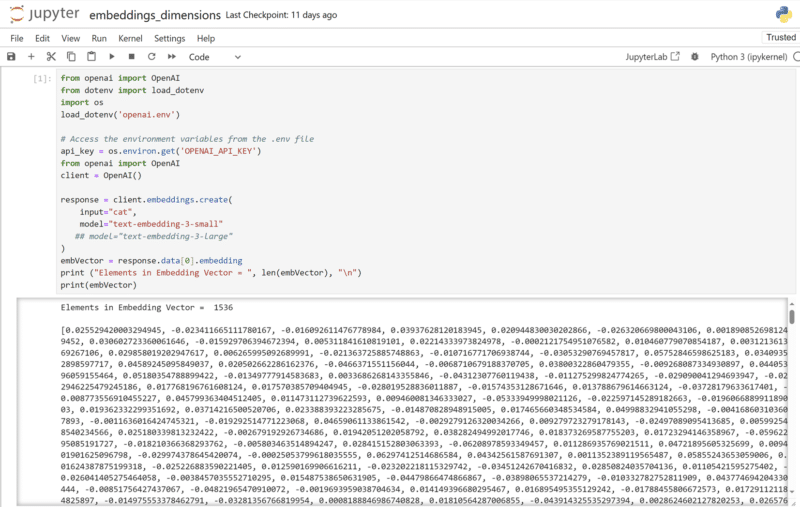

AI models do not understand words as humans do. Instead, they convert words into numerical representations called embeddings. These embeddings capture the relationships between words, such as their semantic or syntactic similarity, allowing the AI to understand the context and quantify the similarity of meaning. In my humble opinion, this is the single most important concept for a technical person to understand before trying to build a generative AI solution!

To illustrate the complexity of embeddings, compare the two images accompanying this section. The first shows a highly simplified illustration of embeddings in two dimensions, which is easier for a human to consume. The second shows a more realistic example from a generative AI implementation: the embedding for the simple English word “cat”, an array of 1,536 floating-point numbers.
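A small sketch of how similarity of meaning is quantified, using made-up 2-dimensional embeddings in the spirit of the simplified illustration. Real embeddings, such as the 1,536-number vector for “cat”, work the same way with many more dimensions. Cosine similarity compares the direction of two vectors: values near 1 indicate similar meaning, and lower values indicate less similar meaning.

```python
import math

# Made-up 2-D embeddings for illustration only.
EMBEDDINGS = {
    "cat": [0.90, 0.80],
    "kitten": [0.85, 0.75],
    "dog": [0.80, 0.60],
    "car": [-0.70, 0.20],
}

def cosine_similarity(a, b):
    """Dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

for word in ("kitten", "dog", "car"):
    score = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[word])
    print(f"cat vs {word}: {score:.3f}")
```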

5. Vector Databases

Generative AI uses vector databases to store and retrieve information in the form of multi-dimensional vectors. These vectors represent distinct characteristics or qualities of the data.

While conventional databases search for exact data matches, the primary benefit of a vector database is its ability to quickly locate and retrieve data according to vector proximity or resemblance. This allows for searches rooted in semantic or contextual relevance rather than relying solely on exact matches or set criteria.

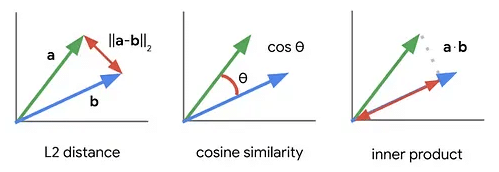

For example, to find approximate nearest neighbors, the system might measure proximity using Euclidean (L2) distance, cosine similarity, or the dot (inner) product. An example is shown in the accompanying image.
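The three proximity measures named above, plus a brute-force nearest-neighbor search, in plain Python. The stored vectors and document IDs are made up. A brute-force scan compares the query against every stored vector and is exact; vector databases typically use approximate nearest-neighbor indexes instead, trading a little accuracy for speed across millions of vectors.

```python
import math

def euclidean_distance(a, b):
    """L2 distance: smaller means closer."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def dot_product(a, b):
    """Inner product: larger means closer (sensitive to vector length)."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Angle-based similarity: larger means closer (ignores vector length)."""
    return dot_product(a, b) / (math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b)))

# Each metric: (scoring function, whether a higher score means closer).
METRICS = {
    "euclidean": (euclidean_distance, False),
    "cosine": (cosine_similarity, True),
    "dot": (dot_product, True),
}

# Hypothetical stored vectors, keyed by document ID.
STORED = {
    "doc-a": [0.1, 0.9, 0.2],
    "doc-b": [0.8, 0.1, 0.1],
    "doc-c": [0.2, 0.7, 0.4],
}

def nearest(query, k=1, metric="cosine"):
    """Exact k-nearest-neighbor search by scanning every stored vector."""
    score, higher_is_closer = METRICS[metric]
    ranked = sorted(STORED, key=lambda doc_id: score(query, STORED[doc_id]),
                    reverse=higher_is_closer)
    return ranked[:k]

query = [0.15, 0.85, 0.25]
for metric in METRICS:
    print(metric, nearest(query, k=2, metric=metric))
```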

What Are the Benefits of Generative AI for Modern Businesses?

Generative AI is not just a buzzword: it’s a powerful tool that can enhance operational efficiency, improve customer engagement, and unlock new revenue streams. However, capitalizing on it requires strategic planning to identify the right opportunities and good data to feed your model.

Generative AI can significantly boost operational efficiency across various business functions by:

- Automating repetitive tasks

- Streamlining workflows

- Generating insights to speed up decision making

Generative AI can revolutionize customer interactions and experiences by:

- Creating personalized customer experiences

- Enabling 24/7 customer support

- Enhancing marketing campaigns

Generative AI opens up new opportunities for businesses to generate revenue through:

- Shortening product development timelines

- Data monetization

- Improving sales processes

Businesses that leverage generative AI can gain a significant edge. McKinsey estimates that generative AI could add $2.6 to $4.4 trillion annually to the global economy through increased productivity.

Are you ready to integrate generative AI into your business strategy? Contact us for generative AI solutions tailored to your specific needs and stay ahead of the competition.

This blog post was created with the assistance of generative AI